FUSION-BASED TARGET RECOGNITION SYSTEMS

WHAT IS IT?

Fusion-based Target Recognition Systems use data-driven techniques to develop and advance artificial intelligence (AI) and machine learning (ML) for the next generation of multi-sensor, fusion-based target recognition systems. They leverage synthetic, scale-model and real sensor data to tackle both the technical challenges of maturing AI algorithms and the challenges of operational use. AI/ML algorithms require large amounts of data to train them and to assess their performance. That data is difficult to obtain from real sensors across a large set of operating conditions.

Multi-INT ATR for Geospatial Intelligence Capabilities (MAGIC) operationally demonstrates multi-INT EO/IR/SAR/HSI exploitation algorithms, with multi-modality fusion of hybrid CAD model-based automated target recognition (ATR) and physics-based machine learning ATR, for real-time detection and identification of targets of interest at the tactical edge. Performance Estimation for Multi-Sensor ATR (PEMS) uses synthetic data to enable sensor exploitation and information fusion research over an extensive range of operating conditions at massive scale, and to build high-fidelity performance models. Multi-Aperture Reduced-scale Verification and Evaluation Lab (MARVEL) performs multi-sensor measurements on small-scale models to enable rapid innovation and validation of multi-sensor algorithms.

Our research integrates large amounts of data, machine learning and high performance computing to advance the development of current and next-generation fusion-based target recognition systems and to address the Department of the Air Force’s Operational Imperatives.

FACT BOX

- Multi-modality fusion-based automated target recognition (ATR) increases identification of real targets and reduces false alarms from target decoys

- Synthetic and scale-model data provide the large data volumes needed to mature and assess the detection and identification capabilities of fusion-based systems, volumes unavailable from real sensors

- High Performance Computing (HPC) generates synthetic data and runs large-scale machine learning fusion experiments

WHY IS IT IMPORTANT?

Aircraft pilots need accurate and reliable target information quickly to drive decision-making. Single-sensor ATR systems are less robust to difficult deployment conditions, whether natural or adversary-induced. Multi-sensor fusion provides robustness because different sensors have complementary strengths and weaknesses across varied operating conditions. In addition, fusion can combine information from current-generation sensors to enhance the war-fighter’s capability, significantly exceeding the performance of single-sensor ATRs. Furthermore, this fusion capability can transition to operational use far sooner than next-generation sensor capability.

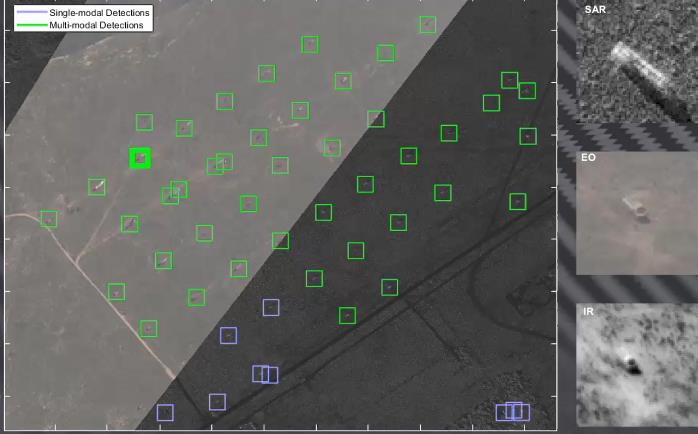

Single modality (blue) vs. multi-modality (green) detection results. Credit: BAE

HOW DOES IT WORK?

Fusion-based Target Recognition Systems feed multi-modality results from hybrid model-based ATR and physics-based machine learning ATR into a fusion algorithm. That algorithm provides both flexible decision-level fusion and high-performance feature-level fusion. Together, these deliver real-time detection and identification of critical mobile targets and theater ballistic missiles under camouflage, concealment and deception.
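To make decision-level fusion concrete, here is a minimal sketch of one common way to combine per-modality classifier outputs. This is not the MAGIC fusion algorithm, and it does not cover feature-level fusion. It assumes the following:

- Each single-modality ATR returns class probabilities over the same target classes.
- The modalities are conditionally independent given the class.
- A hypothetical per-modality reliability weight tempers each modality's vote.

The function name `fuse_decisions` and the example class labels are illustrative only.

```python
"""Minimal decision-level fusion sketch (weighted product rule).

Assumptions (hypothetical, not the MAGIC implementation): every modality
outputs p(class | observation) over the same class list, modalities are
conditionally independent given the class, and `weights` are illustrative
per-modality reliability factors.
"""
import math
from typing import Dict, List


def fuse_decisions(
    scores: Dict[str, List[float]],
    weights: Dict[str, float],
    prior: List[float],
    eps: float = 1e-9,
) -> List[float]:
    """Fuse per-modality class posteriors into one posterior.

    Under conditional independence:
        p(c | x_1..x_M)  is proportional to  p(c) * prod_m [p(c | x_m) / p(c)]
    Each modality's likelihood ratio is raised to its weight w_m.
    """
    n = len(prior)
    log_post = [math.log(p + eps) for p in prior]
    for modality, probs in scores.items():
        w = weights.get(modality, 1.0)
        for c in range(n):
            # Divide out the prior so it is not counted once per modality.
            log_post[c] += w * (math.log(probs[c] + eps) - math.log(prior[c] + eps))
    # Normalize with a max-shift (log-sum-exp) for numerical stability.
    m = max(log_post)
    unnorm = [math.exp(v - m) for v in log_post]
    total = sum(unnorm)
    return [u / total for u in unnorm]


if __name__ == "__main__":
    classes = ["target", "decoy", "clutter"]  # hypothetical labels
    fused = fuse_decisions(
        scores={"EO": [0.5, 0.4, 0.1], "IR": [0.6, 0.1, 0.3]},
        weights={"EO": 1.0, "IR": 1.0},
        prior=[1 / 3, 1 / 3, 1 / 3],
    )
    print([round(p, 2) for p in fused])  # → [0.81, 0.11, 0.08]
```

In this toy case, EO alone barely separates the target from the decoy (0.5 vs. 0.4), and IR alone is only moderately confident (0.6). The fused result favors the target more strongly than either modality does. This mirrors the fact-sheet claim that fusion reduces false alarms from decoys, though only under the stated independence assumption.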

ADDITIONAL FACTS

The Autonomy Technology Research Center (ATRC) provides foundational research for Fusion-based Target Recognition Systems. Its summer interns complete mentor-guided projects on high-risk, high-payoff ideas that address the fundamental challenges of ATR, fusion and autonomy. The ATRC accelerates development of ATR technology and provides a principled way to perform fusion research. It also produces vested, vetted, technically ready, hireable interns. Over 50% go on to work for the Air Force or another DoD organization, as government employees or contractors.

The large number of possible operating conditions requires synthetic or scale-model data to understand how variation in one or more operating conditions affects AI/ML algorithm performance within MAGIC and other fusion engines. Experiments are driven by gaps in current target recognition capabilities and by explicit customer needs. They include synthetic-data experiments in PEMS, scale-model experiments in MARVEL, and ATRC projects.
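A quick count shows why the number of operating conditions grows beyond what real collections can cover. The sketch below enumerates a purely hypothetical operating-condition grid. The factor names and levels are illustrative assumptions, not the ones used in PEMS or MARVEL. Still, even these modest choices multiply into tens of thousands of combinations per target type.

```python
"""Illustrative operating-condition grid.

All factors and levels below are hypothetical examples chosen for
illustration; real studies define their own.
"""
from itertools import product
from math import prod

factors = {
    "time_of_day_hr": list(range(0, 24, 2)),     # 12 levels (every 2 hours)
    "band": ["VNIR", "SWIR", "MWIR", "LWIR"],     # 4 levels
    "aspect_deg": list(range(0, 360, 15)),        # 24 levels
    "depression_deg": [15, 30, 45, 60],           # 4 levels
    "background": ["desert", "forest", "urban"],  # 3 levels
    "concealment": ["none", "netting", "decoy"],  # 3 levels
}

# Total combinations = product of the number of levels per factor.
n_conditions = prod(len(levels) for levels in factors.values())

# Lazily iterate the full grid; each tuple is one condition a synthetic-data
# generator could be asked to render (here we only take the first one).
grid = product(*factors.values())
first_condition = next(grid)

print(f"{n_conditions} condition combinations per target type")
# → 41472 condition combinations per target type
```

Adding one more factor, or refining an existing one, multiplies the count again. This is the scaling that makes synthetic generation on HPC attractive compared with field collection.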

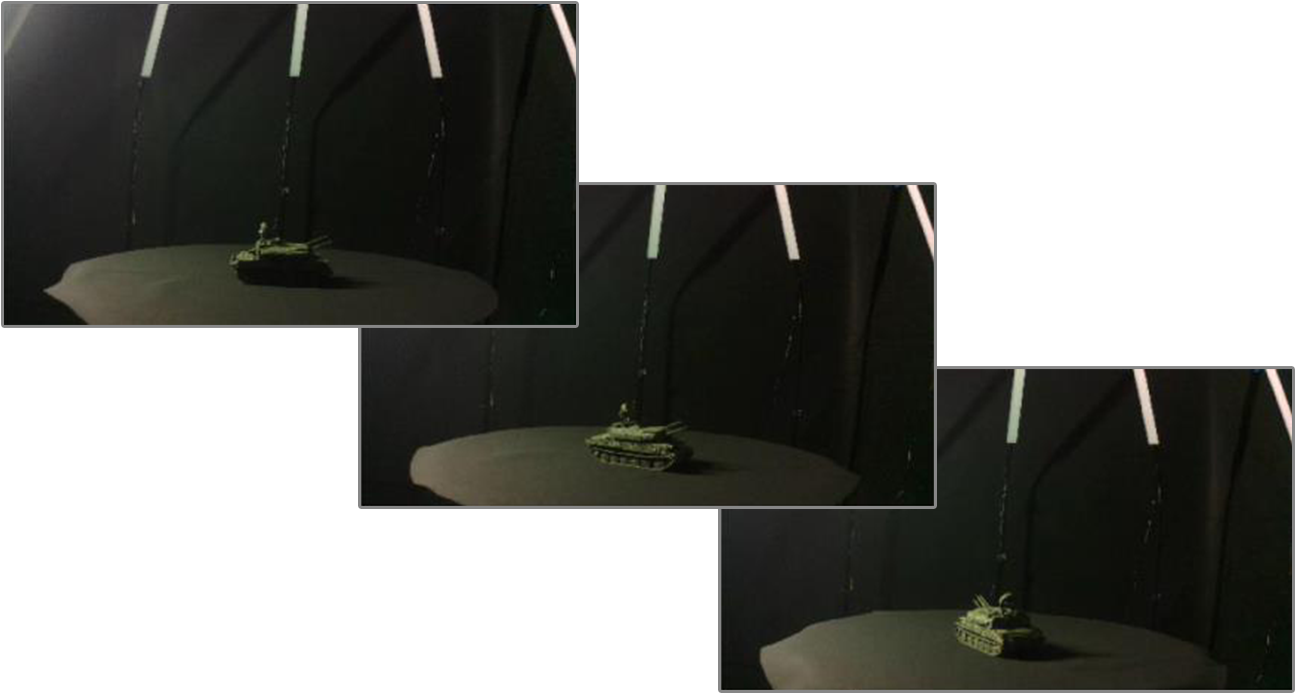

A recent effort characterized how the times of day represented in an algorithm’s training data affect AI/ML algorithm performance in different EO/IR bands. The study covered lighting conditions that existing measured data had missed.

- The first experiment used synthetic data in PEMS to test two things: whether more variety in the training data improved performance, and whether classifiers generalized well beyond the conditions they were trained on. The classifiers generalized poorly to lighting conditions they had never been exposed to. Sparse sampling across all lighting conditions, however, helped significantly.

- A second experiment in PEMS showed that fusing results from VNIR and MWIR sources improved on single-modality performance under multiple lighting conditions. In particular, MWIR compensates for poor VNIR performance in low-light conditions such as nighttime. VNIR compensates for MWIR target/background “blending” in the early morning.

- Finally, MARVEL scale-model data was used to quickly assess enhancements to MAGIC EO modeling capabilities. The experiments validated enhanced MAGIC EO ATR approaches across multiple times-of-day. The results demonstrated substantial ATR performance gains using enhanced modeling.

Scale-model data collected in varying lighting conditions. Credit: Paul Sotirelis
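The first PEMS experiment contrasts two ways of building training data. One withholds some lighting conditions entirely. The other sparsely samples every lighting condition. The sketch below shows how those two splits might be constructed.

It rests on hypothetical assumptions:

- Each synthetic sample is tagged with an integer hour of day.
- "Sparse sampling" means keeping a small fixed fraction of each hour for training.

The record layout and function names are illustrative, not PEMS interfaces.

```python
"""Two hypothetical training-split designs for a time-of-day study."""
import random
from typing import Dict, List, Set, Tuple

Sample = Tuple[int, str]  # (hour_of_day, sample_id): hypothetical record layout


def holdout_split(
    samples: List[Sample], unseen_hours: Set[int]
) -> Tuple[List[Sample], List[Sample]]:
    """Train only on hours outside `unseen_hours`; test on the held-out hours.

    This probes generalization to lighting never seen during training.
    """
    train = [s for s in samples if s[0] not in unseen_hours]
    test = [s for s in samples if s[0] in unseen_hours]
    return train, test


def sparse_split(
    samples: List[Sample], frac: float, seed: int = 0
) -> Tuple[List[Sample], List[Sample]]:
    """Put a small random fraction of *every* hour into training; the rest is test."""
    rng = random.Random(seed)
    by_hour: Dict[int, List[Sample]] = {}
    for s in samples:
        by_hour.setdefault(s[0], []).append(s)
    train: List[Sample] = []
    test: List[Sample] = []
    for _, group in sorted(by_hour.items()):
        group = group[:]  # copy so the caller's data is not reordered
        rng.shuffle(group)
        k = max(1, int(frac * len(group)))  # keep at least one sample per hour
        train.extend(group[:k])
        test.extend(group[k:])
    return train, test


if __name__ == "__main__":
    # 100 hypothetical images for each hour of the day.
    samples = [(h, f"img_{h:02d}_{i}") for h in range(24) for i in range(100)]
    night = {0, 1, 2, 3, 22, 23}
    train_a, test_a = holdout_split(samples, unseen_hours=night)
    train_b, test_b = sparse_split(samples, frac=0.05)
    print(len(train_a), len(test_a))  # → 1800 600
    print(len(train_b), len(test_b))  # → 120 2280
```

The held-out design trains on more images (1800) but never shows the model a night scene. The sparse design trains on far fewer images (120) yet touches every hour. The fact-sheet finding suggests that coverage can matter more than volume for this kind of generalization.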

ABOUT AFRL

The Air Force Research Laboratory (AFRL) is the primary scientific research and development center for the Department of the Air Force. AFRL plays an integral role in leading the discovery, development, and integration of affordable war-fighting technologies for our air, space, and cyberspace force. With a workforce of more than 11,500 across nine technology areas and 40 other operations across the globe, AFRL provides a diverse portfolio of science and technology ranging from fundamental to advanced research and technology development. For more information, visit: www.afresearchlab.com.