STARS

SAFE TRUSTED AUTONOMY FOR RESPONSIBLE SPACECRAFT

KEY TECHNOLOGIES

- Reinforcement learning-based AI neural network control for multi-spacecraft operations

- Safe close-proximity operations using run-time assurance technology to maximize mission life

- Human-autonomy interfaces for operator delegation of AI/ML control with preferences

- Terrestrial multi-satellite testing facility

WHAT IS IT?

What would it take to put a neural network control system on a spacecraft? What advantages might this technique offer for responding rapidly to uncertain and anomalous situations? How do we guarantee safety? How will the neural network-based autonomy interact with United States Space Force (USSF) Guardians? How do we test satellite autonomy in a cost-efficient way?

There is a paradigm shift in the USSF and SPACECOM toward providing services in space. STARS seeks to address this concept by leveraging state-of-the-art artificial intelligence/machine learning (AI/ML) technologies. However, these tools are not yet trusted by the community at large, and platform-level (or mission-level) autonomy remains untrustworthy to operators because of its uncertainty, opacity, brittleness, and inflexibility. To unleash the full disruptive capability of autonomy, safety must be built in to earn that trust.

STARS has the following objectives:

- Develop reinforcement learning-based neural network multi-satellite control and decision-making algorithms

- Develop run-time assurance approaches that mitigate hazards and allow the autonomy to stay on mission

- Develop flexible human-autonomy teaming

Each of these three objectives imposes constraints on the others, and there are tradeoffs among them.

Local Intelligent Collaboration of Networked Satellites (LINCS) Laboratory

HOW DOES IT WORK?

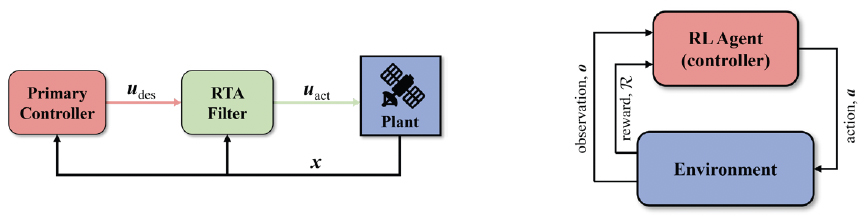

AFRL is using machine learning tools called Reinforcement Learning (RL) to train intelligent agents to take actions in an environment, with the goal of maximizing an overall, long-term reward. RL is based on the psychological concept of operant conditioning, which, for example, can be used to train a dog with positive and negative reinforcement. RL is used in this project because of its exceptional performance in environments with high-dimensional state spaces, complex rule structures, and unknown dynamics. In such environments it is difficult to craft a reliable, high-performing solution using traditional, robust decision-making tools. RL, however, has demonstrated the ability to create agents that outperform humans in breakthroughs ranging from board games like Go and real-time strategy games like StarCraft to military engagement scenarios like those conducted in AlphaDogfight.
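
The reward-maximizing loop described above can be illustrated with a minimal sketch. It is not the STARS training setup: it swaps the neural network for a small lookup table (tabular Q-learning), and the one-dimensional "approach the goal position" environment, the reward values, and all names (`step`, `train`, `greedy_policy`) are hypothetical, chosen only to show an agent improving its actions from reward feedback.

```python
import random

# Hypothetical toy environment: positions 0..5 on a line, goal at position 5.
N_POSITIONS = 6
ACTIONS = (-1, +1)  # move away from the goal / move toward the goal


def step(pos, action):
    """Apply an action and return (new_position, reward, done)."""
    new_pos = min(max(pos + action, 0), N_POSITIONS - 1)
    done = new_pos == N_POSITIONS - 1
    # Positive reinforcement at the goal, a small penalty for every other step.
    reward = 1.0 if done else -0.01
    return new_pos, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: learn the long-term value of each (position, action)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_POSITIONS)]
    for _ in range(episodes):
        pos = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))      # explore
            else:
                a = 0 if q[pos][0] > q[pos][1] else 1  # exploit current estimate
            new_pos, reward, done = step(pos, ACTIONS[a])
            # Target = immediate reward + discounted best future value.
            target = reward + (0.0 if done else gamma * max(q[new_pos]))
            q[pos][a] += alpha * (target - q[pos][a])
            pos = new_pos
            if done:
                break
    return q


def greedy_policy(q):
    """Best known action for every non-goal position."""
    return [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q[:-1]]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

A neural network plays the role of the table `q` when the state space is too large to enumerate, which is the high-dimensional case the text refers to.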

However, AI is brittle. A mistake by the AI on a satellite can result in the loss of billions of dollars of economic value, priceless data and space-based services, and, for the military, the lives of soldiers on the ground who rely on satellite imagery and communications for their missions. Run-time assurance can be thought of as a safety filter on the AI control output. It monitors the desired output of the autonomous controller for commands that are anticipated to cause a safety violation and, when necessary, modifies or substitutes the control signal that is sent to the environment.
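
The safety-filter idea can be sketched as a simple switching filter. This is an illustrative assumption, not the STARS implementation: the one-dimensional approach model, the keep-out distance, the braking limit, and the names `State`, `predicted_safe`, and `rta_filter` are all hypothetical. The filter passes the controller's desired command through unless a one-step prediction shows that the spacecraft could no longer stop outside the keep-out distance, in which case it substitutes a braking command.

```python
from dataclasses import dataclass


@dataclass
class State:
    distance: float       # metres to the other spacecraft (hypothetical 1-D model)
    closing_speed: float  # m/s, positive when approaching


def predicted_safe(state, accel, dt, min_distance, max_brake):
    """Predict one step ahead, then check that full braking from the predicted
    state would still stop outside the keep-out distance."""
    speed = state.closing_speed + accel * dt
    distance = state.distance - state.closing_speed * dt - 0.5 * accel * dt ** 2
    if speed <= 0:
        # Not approaching after this step; only the distance matters.
        return distance >= min_distance
    stopping_distance = speed ** 2 / (2 * max_brake)
    return distance - stopping_distance >= min_distance


def rta_filter(state, desired_accel, dt=1.0, min_distance=100.0, max_brake=0.5):
    """Return (command_to_send, intervened).

    desired_accel is the autonomous controller's output; positive values
    increase the closing speed. A fielded system would also need assurance
    that the backup command itself is safe, which this sketch omits.
    """
    if predicted_safe(state, desired_accel, dt, min_distance, max_brake):
        return desired_accel, False  # pass the AI command through unchanged
    return -max_brake, True          # substitute the backup braking command


if __name__ == "__main__":
    print(rta_filter(State(distance=1000.0, closing_speed=1.0), 0.1))
    print(rta_filter(State(distance=105.0, closing_speed=5.0), 0.1))
```

Substituting a fixed backup command is the simplest design; a filter that minimally modifies the desired command instead would keep the autonomy closer to its mission at the cost of a more involved computation.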

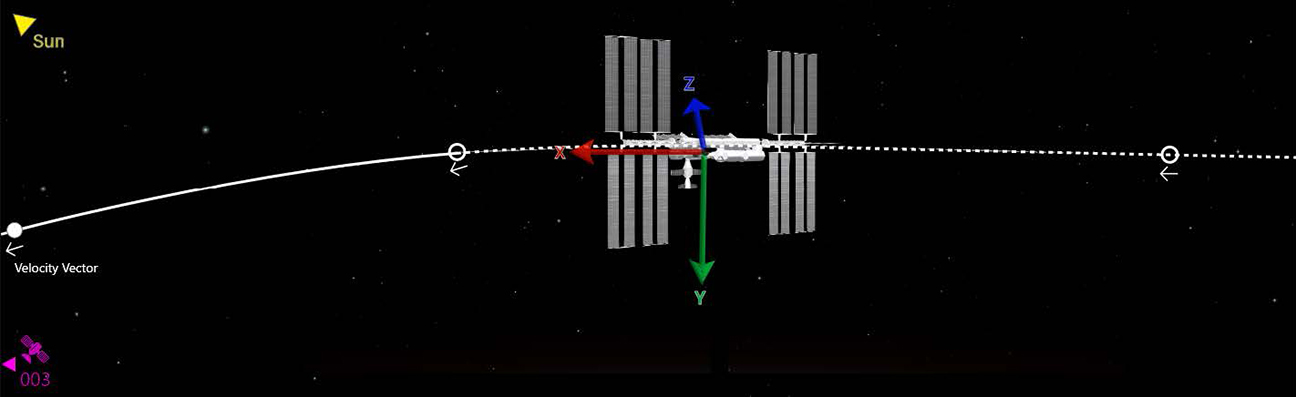

Showing the autonomy's actions and safety concerns to a Guardian operator is paramount to improving trust in the algorithms. Since operators cannot physically see the actions of a satellite, they are limited to the information downlinked in telemetry. Moreover, mission planning is a large endeavor for satellite operations. The human-autonomy interface tools aim to couple mission planning and operations for multi-vehicle close-proximity interactions.

Concept design of the mission planning and operator’s tool